How effective is your online training? The fundamentals to assess e-learning impact.

The migration of training to the digital and online worlds has been given a helpful push by the coronavirus lockdowns.

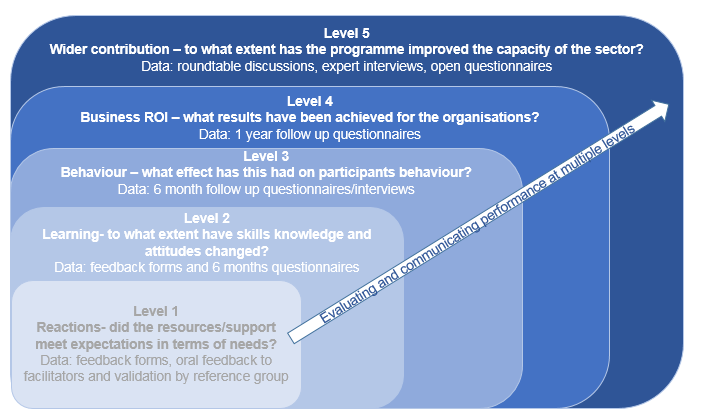

It is no longer just an opportunity for educators, but an imperative. So here are five levels of impact to build into the evaluation of any learning and development program, and ideas on how to put them into practice for an edtech course.

The breakdown of learning evaluation into levels comes from well-known theorists in the education space. A general trend across their models is that they move from the micro (immediate, individual changes) towards outcomes at the macro level (longer-term and for more stakeholders). In this sense, they are similar to a Theory of Change diagram which moves from short-term to long-term outcomes.

Some of the key thinkers guiding practice include Donald Kirkpatrick (1959), Kaufman, Keller and Watkins (1995) and Robert Brinkerhoff (2006). Below, I summarise their thinking into five levels (building on Kirkpatrick).

If you cover all these areas, you should be able to tell whether your new online training is expensive digital clutter, making your learners cry “not another platform to download?!”, or a game-changing edtech solution that shakes up whole sectors.

Remember, your evaluation does not have to cover all five levels. Pitch your evaluation level based on the audience for the report and the size of the course. That said, Levels 1 and 2 should be achievable for almost all trainers.

Level 1 – Reactions

This level is about whether the content met the expectations of students in terms of design, delivery and experience of the course.

A national training provider once approached us with data from a classic feedback form. It included questions like “how would you rate …the trainer’s presentation? …the length of the course? …the exercises?” culminating with “I am happy I attended this course – Yes/No?”.

Our contact inherited the program, and when a funder asked for an impact report, she believed her feedback data would hold all the answers. She is not alone in this belief. Most people are familiar with seeing a form like this at the end of training (if anything at all) and unthinkingly assume that learning effectiveness is being captured.

That is not to say these forms are useless. Feedback forms like my client’s do work for a very simple evaluation. They provide Level 1 information by assessing both sentiment (whether your learners enjoyed the experience) and feedback on the delivery method (whether the content is engaging). This will be important as you roll out a new digital format, as the experience may be new to both the provider and the learner.

To gather data at Level 1, a simple end-of-course feedback form should suffice. Plug it into the last page of your course before users are invited to close the platform, or send it immediately by email to maximise the response rate.

If you are working in a smaller setting, perhaps with a small class, why not gather quotes from oral feedback? You could do this simply by asking for direct reactions at the end of class (of course, this could be biased if it is led by the trainer).

If you are running a larger course, consider setting up a reference group or cohort. This could be a representative sample of your learners, or a group of experienced trainers who can provide qualitative feedback through independent sessions or interviews.

Level 2 – Learning

Level 2 is about the achievement of learning outcomes, such as an increase in skills and knowledge in a topic.

It is tempting to believe that just because you delivered a killer course, with an engaging, interactive online platform, you must have increased knowledge.

At my workplace we have an annual web-based training in professional independence. It is an essential course for ensuring that professionals in the firm do not (intentionally or unintentionally) take advantage of sensitive client information. And hats off to the company: they update the design of the course each year with videos, puzzles, case studies and bite-size delivery of information.

The thing is, many people have worked in the firm so long that they click through the content as fast as they can. You need to mark the whole course as complete and then pass a test. The old hands can pass the test without engaging with the course because it’s their business to know this stuff inside out.

So, has there been any learning? For a segment of learners this is unlikely because they were able to pass the test just as well before embarking on the training, as after. This could be the same for your course, or perhaps someone had technical challenges or learning difficulties. You will never know until you check.

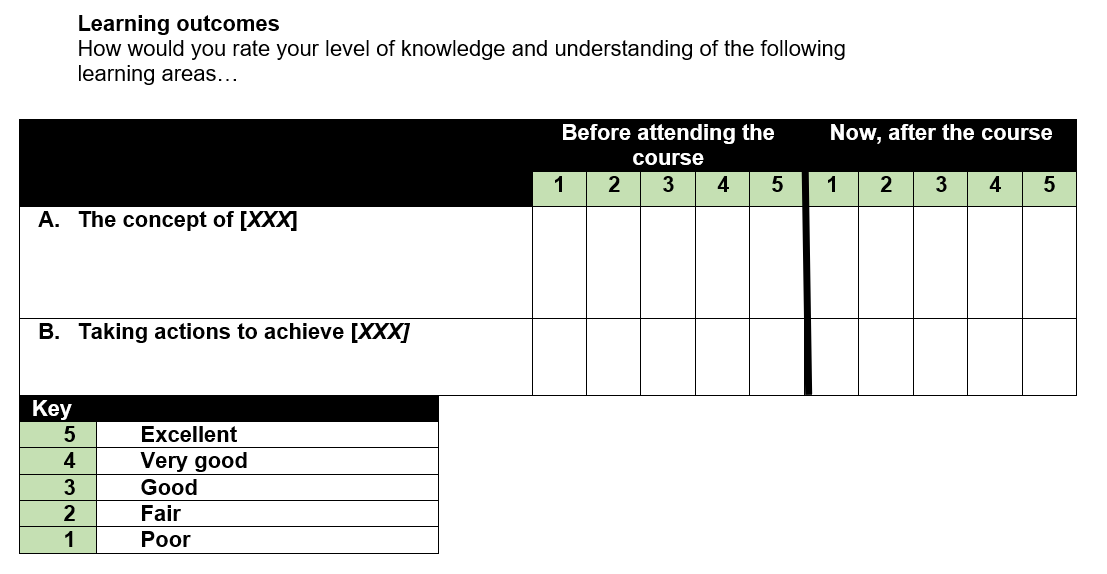

To gather data on Level 2, you need to assess the level of knowledge, skill or awareness on a topic prior to the student undertaking the course (a baseline). You then need to assess their level again at the end of the course.

Ideally, this would be a test taken at the start of the course, with the scores compared against those from a very similar test at the end. This is a watertight approach (assuming your test accurately assesses the learning outcomes). However, it can be hard to implement in practice, as time is often precious on courses and continual testing can cause fatigue.
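
As a minimal sketch of the pre/post comparison, assuming each learner sits a matched test before and after the course: the learner IDs, scores and pass mark below are invented for illustration. The sketch reports each learner's gain, the average gain, and which learners could already pass at baseline (the "old hands" for whom little learning can be claimed).

```python
from statistics import mean

# Hypothetical scores (percent correct) on matched pre- and post-course tests.
# Learner IDs, scores and the pass mark are illustrative assumptions.
scores = {
    "learner_a": {"pre": 40, "post": 75},
    "learner_b": {"pre": 85, "post": 90},
    "learner_c": {"pre": 55, "post": 50},
}
PASS_MARK = 70  # assumed pass threshold


def summarise_learning(scores, pass_mark):
    """Return per-learner gains, the mean gain, and learners who passed at baseline."""
    gains = {name: s["post"] - s["pre"] for name, s in scores.items()}
    already_passing = sorted(
        name for name, s in scores.items() if s["pre"] >= pass_mark
    )
    return {
        "gains": gains,
        "mean_gain": mean(list(gains.values())),
        "already_passing": already_passing,
    }


summary = summarise_learning(scores, PASS_MARK)
print(summary)
```

Separating out the learners who passed at baseline keeps a high final pass rate from being mistaken for evidence of learning.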

The other option is to include some self-report. This could include self-report on the baseline level of knowledge. For example, in some circumstances you can simply ask a learner if they are a complete novice. It gets a little trickier if you want to define an intermediate level: for example, one person’s idea of conversational Spanish might be very different to another’s.

Finally, you can ask people to self-report their pre and post knowledge on the topics you covered retrospectively using a simple scale. This would look a little like the following:
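
A minimal sketch of how such a retrospective scale could be scored, assuming a hypothetical 1 (novice) to 5 (expert) rating collected once, at the end of the course, for both "before" and "after". The topic names, learner IDs and ratings are invented for illustration.

```python
from collections import defaultdict

# Hypothetical end-of-course responses: each learner rates their knowledge
# of each topic before and after the course on an assumed 1-5 scale.
responses = [
    {"learner": "a", "topic": "Topic 1", "before": 2, "after": 4},
    {"learner": "b", "topic": "Topic 1", "before": 3, "after": 4},
    {"learner": "a", "topic": "Topic 2", "before": 1, "after": 3},
    {"learner": "b", "topic": "Topic 2", "before": 4, "after": 4},
]


def average_shift_by_topic(responses):
    """Average self-reported change (after minus before) for each topic."""
    shifts = defaultdict(list)
    for r in responses:
        shifts[r["topic"]].append(r["after"] - r["before"])
    return {topic: sum(values) / len(values) for topic, values in shifts.items()}


shift = average_shift_by_topic(responses)
print(shift)
```

Reporting the shift per topic, rather than one overall figure, shows which parts of the course learners feel moved them furthest.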

Level 3 – Behaviour

This level means testing whether your content led to any application of the learning.

Application of the learning means using the skills and knowledge gained from the course to do something different. It might be changing a workplace habit after attending a time-management course, or building a new software plug-in if someone attended a coding course.

Behaviour change is notoriously difficult to measure in social impact assessments. This is because it is often delayed, and traditionally social impact research has relied centrally on self-report measures which can easily be biased.

To gather data on Level 3, it is desirable to introduce a follow-up survey or measure. This means monitoring the behavioural (and learning) impact up to, or at, an appropriate point after the course. This could be two weeks if your course had learnings which were simple to apply or, for a larger course, maybe six months.

Ideally you will be able to combine objective measures (observable changes) with subjective reports (opinion-based data). In some cases, objective data alone may be enough: for example, if your course covered pro-environmental habits or lifestyle changes, you might ask people to monitor their energy or water bills, or weigh their landfill rubbish.

If the anticipated behaviour changes are complex and variable, you may prefer to compose some simple self-report scales, or even ask people to share case-studies through open questions. Remember, qualitative data can be more reliable than quantitative data if the numbers are meaningless.

Level 4 – Business Return on Investment

This level examines the business benefits to the course provider. Benefits may be direct income or the achievement of strategic objectives. The returns can be considered based on whether your course is intended for internal audiences or for external attendees.

If your online course is open to external attendees, strategic objectives may include building the organisation’s reputation or strengthening partnerships.

The success criteria for internal courses may include outcomes identified at Levels 2 and 3 because the expectation is that you are building organisational capability. However, they may also include medium-term outcomes like employee engagement and/or efficiency.

To gather data on Level 4, you will probably be looking at a variety of indirect sources. For external courses, marketing data can be used by cross-referencing attendees’ names against subsequent purchases, referrals or sign-ups. Phone interviews and the follow-up survey could also be used to gauge changes in sentiment towards the organisation.
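
As a rough sketch of that cross-referencing step, assuming you hold one list of attendee names and one list of names from later sign-ups (both lists below are invented): names are lower-cased and stripped of extra spaces before matching, which only catches trivial differences in spelling. Matching on names alone can miss or wrongly merge people, so treat the result as indicative.

```python
def normalise(name):
    """Lower-case and collapse whitespace so trivially different spellings match."""
    return " ".join(name.lower().split())


def conversion(attendees, later_signups):
    """Return the attendees who appear in later sign-ups, and the share who do."""
    attended = {normalise(n) for n in attendees}
    signed_up = {normalise(n) for n in later_signups}
    matched = attended & signed_up
    rate = len(matched) / len(attended) if attended else 0.0
    return sorted(matched), rate


# Hypothetical data for illustration only.
attendees = ["Ana Silva", "ben  Okoro", "Chloe Martin"]
later_signups = ["ana silva", "Dev Patel", "Ben Okoro"]

matched, rate = conversion(attendees, later_signups)
print(matched, round(rate, 2))
```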

For internal courses, you may look at year-on-year employee engagement surveys, or specific productivity measures based on the organisation and function. Remember, you need baseline and follow-up data and some consideration of causality (more to follow on this in a later post).

Level 5 – Wider contribution

The final level examines the impact the course has created on the capacity of the sector you are serving, and wider society.

This level is similar to the long-term outcomes in a Theory of Change. It expresses the broader contribution your training is making. For example, an online course in playing the guitar may lead to greater appreciation and health of the music sector. An internal training in data protection might contribute towards greater safety of personal data and therefore more trust in institutions.

Gathering data on your specific contribution to these high-level outcomes may be challenging. Larger, sector-level studies looking at the role of online courses could help. For example, we recently worked with a national sustainability initiative. We were able to explore the collective contribution of both online and offline training run by over 500 organisations. The study explored whether understanding, values and behaviours had shifted as a result of a variety of online and offline courses, and the success factors which made individual courses most effective.

In the absence of useful larger studies, you may like to support the evidence base by hosting a round table discussion on the role of online courses, or by interviewing experts. You could also add an open-ended question into your data gathering tools for Levels 1 and 2.

Final thought: the fundamentals to measure online training effectiveness

The glowing novelty of technology and the dizzying array of platforms to try should not be a distraction from the ultimate test of course effectiveness: the achievement of outcomes.

Edtech is giving traditional classroom settings a good run for their money. However, initial reactions like engagement and entertainment are still given primary importance by most course providers. So, in proving the value of your training, make sure you are reaching beyond Level 1, at least to Level 2, to demonstrate the impact of your education offer.